Which benchmark utilities are recommended for testing NVMe transfer performance in a Linux Environment?


Overview

The three most common benchmark utilities are fio, hdparm, and “dd”.

We ran each utility against the SSD7103 to document how well these tools measure NVMe performance.

We found that fio was the only utility capable of accurately testing the performance of NVMe/SAS/SATA RAID storage.

Descriptions of common Benchmarking Tools for Linux

Hdparm

Introduction

hdparm is used to read, set, and test disk parameters on Linux-based systems, including the read performance and cache performance of hard disk drives.

However, hdparm was not designed as a performance testing tool. It only exercises very low-level operations, and it was not designed to distinguish between the cache and the physical platters of a disk.

In addition, once you have created a file system on the target disk, the test results change drastically. This is particularly noticeable when comparing sequential access with random access.

Command Line Parameters

Using hdparm's -T and -t parameters, you can measure the basic characteristics of a disk in a Linux environment:

Format: hdparm [-CfghiIqtTvyYZ]

a. -t Evaluate the read efficiency of the hard disk.

b. -T Evaluate the read efficiency of the hard disk cache.

Example:

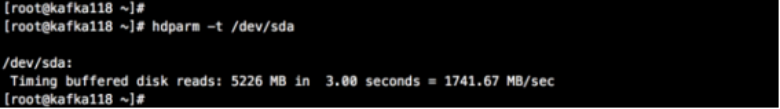

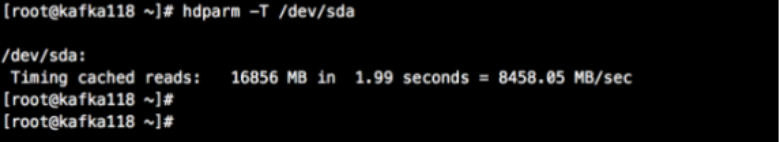

[root@kafka118 ~]# hdparm -t /dev/sda (Evaluate the read efficiency of the hard disk)

[root@kafka118 ~]# hdparm -T /dev/sda (Test the read speed of the hard disk cache)

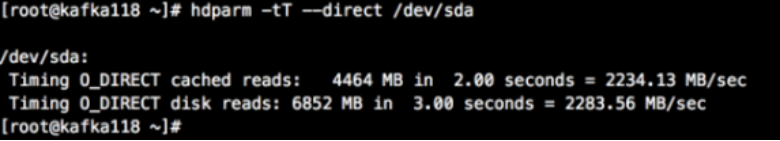

[root@kafka118 ~]# hdparm -tT --direct /dev/sda (Test the read performance of the hard disk directly, using O_DIRECT to bypass the page cache)
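Because a single hdparm run can vary noticeably, the hdparm manual suggests repeating a timing two or three times on an otherwise idle system. The sketch below is not part of the original test; it averages several `-t --direct` runs. The helper name `hdparm_avg_mbps` and the `DEV` and `RUNS` variables are illustrative assumptions, and the parser assumes hdparm's usual `... = N MB/sec` summary line.

```shell
#!/bin/sh
# Average the throughput figure over several hdparm timing runs.
# DEV and RUNS are illustrative; nothing is executed unless DEV is set.
RUNS=${RUNS:-3}

# Read hdparm output on stdin and print the mean of the "disk reads" results.
# Assumes lines such as: "Timing ... disk reads: X MB in Y seconds = Z MB/sec".
hdparm_avg_mbps() {
    awk '/disk reads.*MB\/sec/ { sum += $(NF - 1); n++ }
         END { if (n) printf "%.2f\n", sum / n }'
}

if [ -n "${DEV:-}" ]; then
    i=0
    while [ "$i" -lt "$RUNS" ]; do
        hdparm -t --direct "$DEV"    # requires root
        i=$((i + 1))
    done | hdparm_avg_mbps
fi
```

Saved under a name of your choice, it could be run as root with, for example, `DEV=/dev/sda RUNS=3 sh hdparm_avg.sh`.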

dd

Introduction

“dd” is a command line utility for UNIX and Linux operating systems. Its main purpose is to convert and copy files.

Common Command Line Options

| Option | Description |
| --- | --- |
| if=file name | Input file name; specifies the source file, for example if=inputfile. |
| of=file name | Output file name; specifies the destination file, for example of=outputfile. |
| ibs=bytes | Read bytes at a time (sets the input block size). |
| obs=bytes | Write bytes at a time (sets the output block size). |
| bs=bytes | Set both the input and the output block size to bytes. |
| cbs=bytes | Convert bytes at a time (sets the size of the conversion buffer). |
| skip=blocks | Skip blocks from the beginning of the input file before copying starts. |
| seek=blocks | Skip blocks from the beginning of the output file before writing starts. |
| count=blocks | Copy only the specified number of input blocks. |
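As a quick illustration of how `bs`, `skip`, `seek`, and `count` interact, the following sketch copies blocks out of a 16-byte scratch file. The temporary paths and the 4-byte block size are chosen only for readability and are not part of the original article.

```shell
#!/bin/sh
# Demonstrate bs, skip, seek and count on a 16-byte scratch file.
tmp=$(mktemp -d)
src="$tmp/src"
dst="$tmp/dst"
printf 'AAAABBBBCCCCDDDD' > "$src"

# Skip the first 4-byte input block, then copy the next two blocks.
dd if="$src" of="$dst" bs=4 skip=1 count=2 2>/dev/null
first=$(cat "$dst")
echo "$first"     # BBBBCCCC

# Write one input block at an output offset of one block.
# conv=notrunc tells dd not to truncate the existing output file.
dd if="$src" of="$dst" bs=4 seek=1 count=1 conv=notrunc 2>/dev/null
second=$(cat "$dst")
echo "$second"    # BBBBAAAA

rm -rf "$tmp"
```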

Common command line format

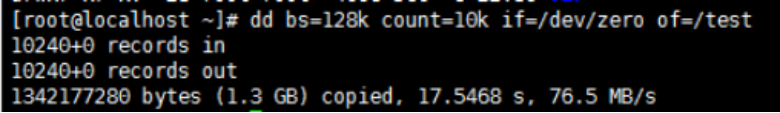

1. # dd bs=128k count=10k if=/dev/zero of=test

By default, dd does not perform a "sync", so the system has not actually written the file to the disk when the dd command completes. The data is simply read into the memory buffer (write cache), and the system only starts writing it to the disk after dd has finished. The speed shown is therefore the speed of filling the buffer, not the write speed of the disk.

Sample Test result:

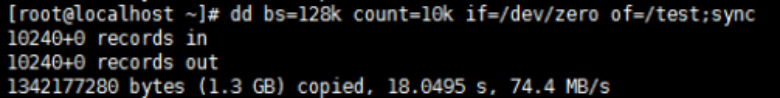

2. # dd bs=128k count=10k if=/dev/zero of=test; sync

Same as above. The two commands separated by the semicolon are independent of each other: by the time sync runs, the dd command has already displayed its "writing speed" value on screen, so the real-world write speed still cannot be obtained.

Sample run results:

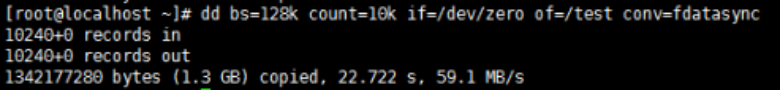

3. # dd bs=128k count=10k if=/dev/zero of=test conv=fdatasync

With conv=fdatasync, dd performs an actual "sync" operation before it exits, so the reported time covers both reading the data (10k blocks of 128k, 1.25 GiB in total) into memory and writing it to the disk. This is closer to a real-world transfer.

Sample run results:

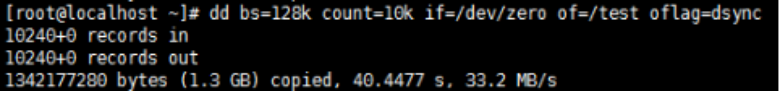

4. # dd bs=128k count=10k if=/dev/zero of=test oflag=dsync

With oflag=dsync, dd performs a synchronous write for every block: after each 128k block is read, it must be written to the disk before the next 128k block is read. This is the slowest method, because the write cache is essentially not used.

Sample test results:
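To reproduce the difference between the cached and synchronized variants on your own system, a minimal sketch along the following lines may help. It assumes GNU dd; `TARGET_DIR`, `BS`, and `COUNT` are illustrative variables, and the default `COUNT` is deliberately small (the commands above use `count=10k`). Point `TARGET_DIR` at a directory on the disk under test, since a RAM-backed temporary directory would not measure a disk at all.

```shell
#!/bin/sh
# Compare the speed dd reports with and without forced flushing.
# TARGET_DIR, BS and COUNT are illustrative assumptions.
TARGET_DIR=${TARGET_DIR:-$(mktemp -d)}
BS=${BS:-128k}
COUNT=${COUNT:-64}

# Run one dd write with optional extra operands and print its summary line.
dd_write_test() {
    label=$1
    shift
    printf '%-16s ' "$label"
    # dd prints its statistics on stderr; keep only the final summary line.
    dd if=/dev/zero of="$TARGET_DIR/test" bs="$BS" count="$COUNT" "$@" 2>&1 | tail -n 1
}

dd_write_test "cached"
dd_write_test "conv=fdatasync" conv=fdatasync
dd_write_test "oflag=dsync" oflag=dsync

rm -f "$TARGET_DIR/test"
```

With a large enough `COUNT`, the first figure is usually the highest because it mostly reflects the write cache, while `oflag=dsync` is usually the lowest.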

Testing the benchmark Utilities

Test environment

| Component | Details |
| --- | --- |
| Motherboard | GIGABYTE X570 AORUS MASTER |
| CPU | AMD Ryzen 9 3900X 12-Core Processor |
| Memory | Kingston HYPERX RURX DDR4 3200MHz |
| BIOS | 1201 |
| Slot | PCIE x16_1 |
| OS | Ubuntu Desktop 20.04.1 (kernel:) |
| HBA | SSD7103 |
| SSD | Samsung 970 Pro 512GB*4 |
| Driver | https://highpoint-tech.com/BIOS_Driver/NVMe/HighPoint_NVMe_G5_Linux_Src_v1.2.24_2021_01_05.tar.gz |
| WebGUI | https://highpoint-tech.com/BIOS_Driver/NVMe/SSD7540/RAID_Manage_Linux_v2.3.15_20_04_17.tgz |
| Script (fio, sequential read) | `fio --filename=/mnt/test.bin --direct=1 --rw=read --ioengine=libaio --bs=2m --iodepth=64 --size=10G --numjobs=1 --runtime=60 --time_based=1 --group_reporting --name=test-seq-read` |
| Script (fio, sequential write) | `fio --filename=/mnt/test.bin --direct=1 --rw=write --ioengine=libaio --bs=2m --iodepth=64 --size=10G --numjobs=1 --runtime=60 --time_based=1 --group_reporting --name=test-seq-write` |
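The test environment lists only the two sequential fio scripts, while the results also cover 4K random reads and writes. The sketch below prints one fio command line per workload. The sequential parameters follow the scripts above, written with fio's documented `time_based` option name. The 4K random settings (`randread`/`randwrite`, `bs=4k`, and reusing the same queue depth and job count) are assumptions, since the article does not state them. `fio_cmd` and `TEST_FILE` are hypothetical names.

```shell
#!/bin/sh
# Print fio command lines for the four workloads in the results table.
# Only the sequential settings come from the article; the 4K random
# settings are assumptions.
TEST_FILE=${TEST_FILE:-/mnt/test.bin}

# Usage: fio_cmd NAME RW_MODE BLOCK_SIZE
fio_cmd() {
    name=$1
    rw=$2
    bs=$3
    echo "fio --filename=$TEST_FILE --direct=1 --rw=$rw --ioengine=libaio" \
         "--bs=$bs --iodepth=64 --size=10G --numjobs=1 --runtime=60" \
         "--time_based=1 --group_reporting --name=$name"
}

fio_cmd test-seq-read   read      2m
fio_cmd test-seq-write  write     2m
fio_cmd test-rand-read  randread  4k
fio_cmd test-rand-write randwrite 4k
```

The script only prints the commands so they can be reviewed first; piping its output to `sh` would run them one after another.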

Test Procedure

- 1. Prepare the motherboard, OS, and SSD7103 with four NVMe SSDs, and set up the test environment;

- 2. Install the driver and WebGUI, then restart the system;

- 3. Partition and format a single disk, then mount it;

- 4. Use fio to test file-based performance;

- 5. Unmount the partition and use hdparm to test its performance;

- 6. Create RAID0 and RAID1 arrays in turn, and repeat steps 3 to 5 for each.
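Steps 3 to 5 can be sketched as a small script. This is only an outline under stated assumptions: the device name `DEV`, the partition name `PART`, the mount point `MNT`, the use of `parted`, and the ext4 file system are all hypothetical choices that the article does not specify, and running hdparm against the whole device rather than the partition is likewise an assumption. By default the script only prints the commands.

```shell
#!/bin/sh
# Dry-run sketch of test steps 3 to 5 for one device.
# DEV, PART, MNT and the ext4 file system are assumptions, not from the article.
DEV=${DEV:-/dev/nvme0n1}
PART=${PART:-${DEV}p1}
MNT=${MNT:-/mnt}
DRY_RUN=${DRY_RUN:-1}     # set DRY_RUN=0 to execute (destroys data on DEV)

# Print the command in dry-run mode, otherwise execute it.
run() {
    if [ "$DRY_RUN" = 1 ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

single_pass() {
    # Step 3: partition, format and mount.
    run parted -s "$DEV" mklabel gpt mkpart primary 0% 100%
    run mkfs.ext4 -F "$PART"
    run mount "$PART" "$MNT"
    # Step 4: file-based fio test (sequential read shown).
    run fio --filename="$MNT/test.bin" --direct=1 --rw=read --ioengine=libaio \
        --bs=2m --iodepth=64 --size=10G --numjobs=1 --runtime=60 \
        --time_based=1 --group_reporting --name=test-seq-read
    # Step 5: unmount, then run hdparm against the block device.
    run umount "$MNT"
    run hdparm -tT --direct "$DEV"
}

single_pass
```

For step 6, the same pass would be repeated with `DEV` set to the RAID0 and then the RAID1 device created in the WebGUI.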

Test Results

|

Result Tool |

Category |

HPT Single Disk |

HPT RAID0 |

HPT RAID1 |

Fio |

2M-Seq-Read(MB/s) |

3577 |

14300 |

7143 |

2M-Seq-Write(MB/s) |

2319 |

9211 |

2299 |

|

4K-Rand-Read(IOPS) |

377K |

1318K |

753K |

|

4K-Rand-Write(IOPS) |

331K |

312K |

262K |

|

| ||||

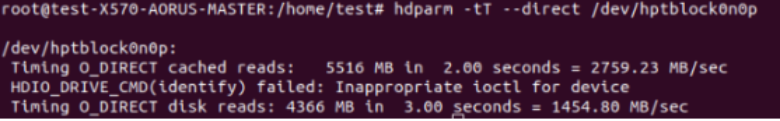

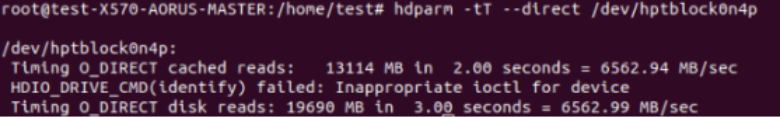

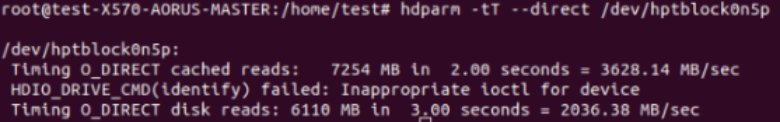

Hdparm |

Cached Reads(MB/s) |

2759.23 |

6562.94 |

3628.14 |

Reads (MB/s) |

1454.80 |

6562.99 |

2036.38 |

|

| ||||

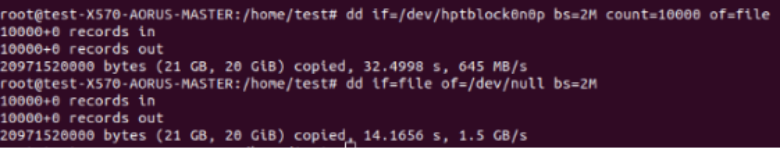

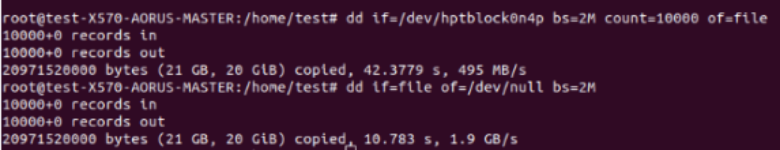

dd |

2M-Read(MB/s) |

1500 |

1900 |

1300 |

Hdparm :

dd:
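To make the fio figures in the results easier to compare, the ratios between configurations can be computed directly from the reported numbers. The short sketch below does this with awk; `ratio` is a hypothetical helper, and the inputs are the fio values reported above.

```shell
#!/bin/sh
# Ratio of two results, printed with two decimals.
ratio() {
    awk -v a="$1" -v b="$2" 'BEGIN { printf "%.2f\n", a / b }'
}

echo "RAID0 seq read      vs single disk: $(ratio 14300 3577)x"   # 4.00x
echo "RAID0 seq write     vs single disk: $(ratio 9211 2319)x"    # 3.97x
echo "RAID0 4K rand read  vs single disk: $(ratio 1318 377)x"     # 3.50x
echo "RAID0 4K rand write vs single disk: $(ratio 312 331)x"      # 0.94x
echo "RAID1 seq read      vs single disk: $(ratio 7143 3577)x"    # 2.00x
```

On these numbers, sequential throughput on the four-drive RAID0 scales almost linearly, while 4K random writes do not scale at all.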

Test analysis

Comparison of Hdparm and fio

- 1. hdparm offers few parameters for performance testing.

- 2. hdparm is normally used to read and set hard disk parameters; it does not distinguish between cache performance and actual physical read performance.

Comparison of dd and fio

- 1. When using dd to test the maximum read bandwidth, the queue depth is always very small (not greater than 2), so the dd results are much lower than those reported by fio.

- 2. When using dd with iflag=direct, the queue depth is 1 and the test result is basically the same as the fio test result. Therefore, if you need to test the latency, bandwidth, and IOPS of a single queue, you can consider using dd.

- 3. When using dd with iflag set to sync or nocache, or without the iflag parameter, the actual I/O block size is 128KB regardless of the value set by the bs parameter.

- 4. dd cannot set the queue depth, so it cannot measure the maximum read IOPS.
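The queue-depth-1 comparison in point 2 could be reproduced with a pair of commands such as the following. This is a sketch, not the procedure used for the results above: `TARGET`, the function names, and the choice of 2M blocks over 10 GiB are assumptions, and fio's `--readonly` flag is added only as a safety measure. Both commands read from the target and write nothing to it.

```shell
#!/bin/sh
# Queue-depth-1 sequential read with dd and with fio, for side-by-side comparison.
# TARGET is a hypothetical device or file; nothing runs unless it is set.

dd_qd1_read() {
    # iflag=direct opens the input with O_DIRECT, bypassing the page cache.
    # 5120 blocks of 2M match the 10G read by the fio job below.
    dd if="$1" of=/dev/null bs=2M count=5120 iflag=direct
}

fio_qd1_read() {
    fio --filename="$1" --readonly --direct=1 --rw=read --ioengine=libaio \
        --bs=2m --iodepth=1 --size=10G --numjobs=1 --group_reporting \
        --name=qd1-seq-read
}

if [ -n "${TARGET:-}" ]; then
    dd_qd1_read "$TARGET"
    fio_qd1_read "$TARGET"
fi
```

Raising `--iodepth` in the fio job is the step that dd has no equivalent for, which is why the two tools diverge at higher queue depths.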

We do not recommend using dd to test performance for the following reasons:

- 1. dd supports only a single I/O model: it can test sequential I/O, but not random I/O;

- 2. dd exposes few tunable parameters, so the test results may not reflect the real performance of the disk;

- 3. dd was not originally intended for performance testing (please refer to the official dd documentation for more information).

Conclusion

“dd” and hdparm are not suited for testing NVMe performance in a Linux environment. We recommend customers use fio.
